For several weeks now there has been growing evidence that Twitter is being used for a covert and highly organised propaganda operation which disparages Shia Muslims while supporting the Sunni Muslim governments of Saudi Arabia and Bahrain.

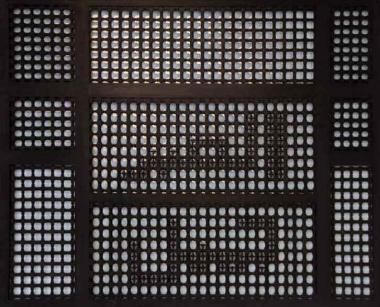

The tactic is to deluge Twitter with multiple copies of identical tweets. These come from fake (robotic) accounts which are programmed to post tweets at fixed intervals. Last month Twitter closed down hundreds of suspect accounts but new ones were created. Altogether, several thousand robotic accounts are involved in the operation, though they are not all active simultaneously.

Besides inflaming sectarian tensions in the region, the apparent purpose is to obstruct genuine discussion on certain topics by swamping the relevant hashtag with automated tweets. The automated tweets often link to YouTube videos which have no connection with the topic.

The latest example – uncovered by Marc Owen Jones, who lectures in Gulf politics at Tübingen University in Germany – seems aimed at stifling criticism by Twitter users of Saudi Arabia's economic aid package for Egypt.

Last Monday @htksa, a genuine Twitter account, posted a tweet in Arabic which said: "Saudi aid to solve the dollar crisis within days" and added the hashtag #السعودية_تدعم_مصر_مليار_دولار which translates as "#saudi_supports_egypt_billion_dollars". Others soon joined in under the same hashtag, asking critical questions such as why the kingdom is sending money abroad when its own economy is suffering from low oil prices and unemployment is high.

Then the robots arrived, inundating the hashtag with spam. The following day Marc Owen Jones gathered API data from Twitter and found 11,847 tweets using the hashtag over a sample two-hour period. More than 10,000 of these – around 90% – almost certainly came from robot accounts. Among these there were only 30 different tweets: the same words were being repeated by multiple accounts. In one case, a tweet had been posted from 799 accounts.
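Jones's exact procedure is not described here, but the counting step above can be illustrated with a minimal Python sketch. It assumes the tweets have already been exported from the API as `(account, text)` pairs; the function names `duplicate_clusters` and `suspect_share` and the `min_accounts` threshold are hypothetical choices for illustration only.

```python
from collections import defaultdict


def duplicate_clusters(tweets, min_accounts=10):
    """Group (account, text) pairs by identical wording.

    Returns {text: (distinct_accounts, total_tweets)} for every text posted
    by at least `min_accounts` different accounts. The threshold of 10 is a
    hypothetical choice, not a figure from the original analysis.
    """
    accounts_by_text = defaultdict(set)
    tweets_by_text = defaultdict(int)
    for account, text in tweets:
        wording = " ".join(text.split())  # ignore trivial whitespace differences
        accounts_by_text[wording].add(account)
        tweets_by_text[wording] += 1
    return {
        text: (len(accounts), tweets_by_text[text])
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }


def suspect_share(tweets, min_accounts=10):
    """Fraction of all tweets whose wording belongs to a duplicate cluster."""
    if not tweets:
        return 0.0
    clusters = duplicate_clusters(tweets, min_accounts)
    flagged = sum(total for _, total in clusters.values())
    return flagged / len(tweets)


# Tiny made-up sample: 12 accounts post the same words, 2 humans post their own.
sample = [(f"bot{i}", "identical text") for i in range(12)] + [
    ("alice", "why send money abroad?"),
    ("bob", "unemployment is high"),
]
print(duplicate_clusters(sample))       # {'identical text': (12, 12)}
print(round(suspect_share(sample), 2))  # 0.86
```

Exact-match grouping only catches word-for-word copies such as the tweet posted from 799 accounts; slightly reworded spam would need a fuzzier comparison.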

Suspicions were first aroused in June when Sheikh Isa Qasim, a prominent Shia cleric, was stripped of his citizenship by Bahrain's rulers. Human Rights Watch condemned the Bahraini government's move, but on Twitter large numbers of tweets appeared attacking Qasim. Marc Owen Jones found 219 of them with exactly the same wording – all apparently coming from different Twitter accounts. After further investigation he concluded they were automated tweets from robotic accounts.

Meanwhile, something similar was happening with the #bahrain hashtag. Monitoring of API data over a 12-hour period on June 22 revealed 10,887 #bahrain tweets – of which slightly more than half appeared to be robot-generated.

But how do we know they are robots? First, the accounts have been created in a series of batches, typically over a period of several days, and the accounts in each batch have similar characteristics. They tend to follow Saudi media accounts and the few followers they have are usually other suspect accounts. They are hardly ever retweeted and do not interact with genuine Twitter users.

If you look carefully at the individual accounts it soon becomes clear they are not real. One batch of more than 40 accounts, created in February this year, has vague biographical notes, often mentioning Allah, which, to quote Marc Owen Jones, seem intended "to convey a sense of pious zeal, or an image of a 'good Muslim'."

The accompanying profile photos appear to have been plucked at random from the internet. For example, an account supposedly belonging to someone called Warhan Jamali uses a photo of Dr Sami bin Abdullah al-Salih, a Saudi diplomat currently serving as the kingdom's ambassador in Algiers. Another account, quoting the Prophet in its bio, apparently belongs to a religious gentleman called Mazher al-Jafali but its profile picture comes from the Facebook page of Omar Borkan al-Gala, an Iraqi-born male model living in Vancouver.

A key difference between human Twitter accounts and robotic accounts is that humans usually tweet when motivated to do so, but robots have to tweet at pre-set times. Obviously, a robot that tweeted every hour, on the hour, would soon be spotted – but these robots are clever. They tweet for a few hours then take a rest. When they are active the timing of their tweets follows a fixed pattern. There are various patterns and they are all quite complex. One robot, for example, was found to be posting two tweets four seconds apart, at intervals of 12 minutes 20 seconds.
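The timing test described above can be sketched in Python, assuming each account's tweet times are available as seconds. The helpers `split_into_sessions` and `repeating_gap_period`, the one-hour rest threshold and the one-second tolerance are all hypothetical; the example also assumes the 12 minutes 20 seconds is measured from the start of one pair to the start of the next.

```python
def split_into_sessions(timestamps, rest_gap=3600.0):
    """Split tweet times (in seconds) into active sessions.

    A gap longer than `rest_gap` seconds is treated as a rest period.
    The one-hour default is a hypothetical choice, not a measured value.
    """
    sessions, current = [], []
    for t in sorted(timestamps):
        if current and t - current[-1] > rest_gap:
            sessions.append(current)
            current = []
        current.append(t)
    if current:
        sessions.append(current)
    return sessions


def repeating_gap_period(timestamps, max_period=5, tolerance=1.0, min_cycles=3):
    """Return the smallest p such that the gaps between consecutive tweets
    repeat every p gaps (within `tolerance` seconds), or None if no such
    short cycle exists. At least `min_cycles` repetitions are required."""
    ts = sorted(timestamps)
    gaps = [b - a for a, b in zip(ts, ts[1:])]
    for p in range(1, max_period + 1):
        if len(gaps) < p * min_cycles:
            break  # too few gaps to confirm a cycle this long
        if all(abs(gaps[i] - gaps[i + p]) <= tolerance for i in range(len(gaps) - p)):
            return p
    return None


# Simulated robot: pairs of tweets 4 s apart, a new pair every 740 s (12 min 20 s).
robot = [k * 740 + d for k in range(10) for d in (0, 4)]
print(repeating_gap_period(robot))  # 2: the gaps alternate 4, 736, 4, 736, ...

# Irregular, human-like timings show no short repeating cycle.
print(repeating_gap_period([0, 300, 1900, 2000, 5000, 5020, 9000]))  # None
```

Because the robots pause between bursts, each session returned by `split_into_sessions` would be checked separately; a long rest gap would otherwise break the cycle.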

So far, it's not known who is behind this. Setting up such a system on such a grand scale would require a lot of programming work and, despite the automation, a good deal of human intervention to keep it running. It would mean constant monitoring of Twitter to determine which hashtags to attack with spam.

This suggests the operation is either connected to some government agency or perhaps an individual sponsor with money to spare and an axe to grind.

Although the spamming is an irritation and interferes with political debate, a more sinister aspect – especially if there's a government connection – is that the robotic accounts are also tweeting hate speech, using derogatory and abusive language to associate Shia Muslims with violence and terrorism. This can only inflame sectarian tensions in an already tense region.
